Objective

To detect, in a real-time camera feed, whether a person is wearing a face mask, using a convolutional neural network (CNN) built with the Keras library.

Introduction

The COVID-19 virus has affected the whole world badly and has had a huge impact on everyday life. One of the main ways people can protect themselves is to wear a mask in public areas. Doctors stress that wearing masks plays a big role in stopping the spread of the virus, even where vaccines are available. In crowded areas, however, checking manually whether everyone is wearing a mask is extremely difficult. We therefore build a model that identifies whether a person is wearing a mask or not. Such a model can help find people who are not wearing masks in crowded places such as malls, bus stands, and other public areas.

Methodology

Dataset :

The dataset we used consists of 1376 images in total: 690 of masked faces and 686 of unmasked faces. Most of the images are artificially created, so they do not represent the real world accurately. The images are drawn in equal proportion from three sources. Because the numbers of masked and unmasked faces are almost equal, the dataset is balanced.

We split the dataset into three parts: a training set, a validation set, and a test set. Splitting the data lets us detect overfitting, where the model learns minor details and noise in the training data that do not matter, so that only its training accuracy improves. What we need is a model that performs well on data it has never seen (the test data); this ability is called generalization. The training set is the subset of the data the model actually learns from: the model observes these examples and optimizes its parameters on them. The validation set is used to select hyperparameters (such as the learning rate and regularization parameters) and to decide when to stop training: once the model performs well enough on the validation set, we can stop training on the training set. The test set is the remaining subset, used to give an unbiased evaluation of the final model.
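The three-way split described above can be sketched with NumPy index arrays. This is a minimal illustration, not the article's own code (which uses scikit-learn's train_test_split together with Keras's validation_split): `three_way_split` is a hypothetical helper, and the 20% validation / 10% test fractions and the seed are illustrative assumptions. Shuffling once and then cutting that single permutation into three slices guarantees that no image appears in more than one set.

```python
import numpy as np


def three_way_split(n_samples, val_frac=0.2, test_frac=0.1, seed=0):
    """Return disjoint (train, validation, test) index arrays covering all samples.

    Hypothetical helper: shuffles the indices once, then slices off the test
    and validation portions; whatever remains is used for training.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]
    return train_idx, val_idx, test_idx


# 1376 images, as in the dataset described above
train_idx, val_idx, test_idx = three_way_split(1376)
print(len(train_idx), len(val_idx), len(test_idx))  # 963 275 138
```

The same index arrays would then select matching rows from the image and label arrays, for example `data[train_idx]` and `target[train_idx]`.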

Architecture :

The CNN architecture is inspired by the organization and functionality of the visual cortex and is designed to mimic the connectivity pattern of neurons in the human brain. The neurons in a CNN are arranged in a three-dimensional structure (width, height, and depth), and each neuron in a convolutional layer is connected only to a small region of the previous layer. In other words, each group of neurons specializes in detecting a particular feature within one part of the image. The CNN combines the features extracted by its layers to produce a final output: a vector of probability scores giving the likelihood that the image belongs to each class.
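To make the idea of neurons that look at a small region concrete, here is a minimal NumPy sketch of a single convolutional filter with stride 1 and no padding (the Keras Conv2D default, padding='valid'). `conv2d_valid` and the vertical-edge kernel are illustrative assumptions: a real Conv2D layer learns many kernels (100 in the first layer of the model built later in this article), adds a bias, and runs on optimized library code. Like Keras, the sketch computes a cross-correlation, so the kernel is not flipped.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    Each output value depends only on the kernel-sized patch beneath it,
    which is the "small region" a convolutional neuron looks at.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# 5x5 image: dark on the left (0), bright in the last two columns (1)
image = np.zeros((5, 5))
image[:, 3:] = 1

# Hand-made vertical-edge kernel (a trained CNN would learn its kernels)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]])

out = conv2d_valid(image, kernel)
# out has shape (3, 3) and every row is [0, 3, 3]: the filter only
# responds where its 3x3 patch straddles the dark/bright edge
```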

The architecture of a CNN is a key factor in determining its performance and efficiency. The way in which the layers are structured, which elements are used in each layer and how they are designed will often affect the speed and accuracy with which it can perform various tasks.

Build a CNN model :

Import the libraries needed to build the CNN model.

Code snippet:

```python
import os

import cv2
import numpy as np
# keras.utils.np_utils only exists in older Keras releases; in current Keras,
# the same function is available as keras.utils.to_categorical
from keras.utils import np_utils
from keras.models import Sequential
from keras.layers import Dense, Activation, Dropout, Conv2D, Flatten, MaxPooling2D
from keras.callbacks import ModelCheckpoint
from sklearn.model_selection import train_test_split
from matplotlib import pyplot as plt
from keras.models import load_model
```

The dataset consists of 1376 images of faces with and without masks. Provide the path to the dataset folder, map each category folder (with mask and without mask) to a numeric label by its name, and create two lists: all images are stored in the ‘data’ list and their labels in the ‘target’ list.

Code Snippet :

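```python
# Use the path where your dataset is stored; it should contain one
# sub-folder per category (faces with masks, faces without masks)
file_loc = 'dataset'

# sorted() makes the category-to-label mapping identical on every run,
# because os.listdir() returns entries in arbitrary order
categories = sorted(os.listdir(file_loc))
labels = [i for i in range(len(categories))]
label_dict = dict(zip(categories, labels))

print(label_dict)
print(categories)
print(labels)

img_size = 32
data = []
target = []

for category in categories:
    folder_path = os.path.join(file_loc, category)
    img_names = os.listdir(folder_path)

    for img_name in img_names:
        img_path = os.path.join(folder_path, img_name)
        img = cv2.imread(img_path)

        try:
            # Convert to grayscale and resize to img_size x img_size pixels
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            resized = cv2.resize(gray, (img_size, img_size))
            data.append(resized)
            target.append(label_dict[category])
        except Exception as e:
            # cv2.imread returns None for unreadable files, so cvtColor fails
            print("Exception: ", e)
```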

Next, convert the ‘data’ and ‘target’ lists into NumPy arrays for processing. Normalize the pixel values, reshape the data array into the four-dimensional form (samples, height, width, channels) that the network expects as input, one-hot encode the labels, and save both arrays as .npy files.

Code snippet :

```python
# Normalize pixel values to the range [0, 1]
data = np.array(data) / 255.0

# Reshape to (samples, height, width, channels); grayscale images have 1 channel
data = np.reshape(data, (data.shape[0], img_size, img_size, 1))
target = np.array(target)

# One-hot encode the labels, e.g. 0 -> [1, 0] and 1 -> [0, 1]
new_target = np_utils.to_categorical(target)

# Save the arrays as data.npy and target.npy
np.save('data', data)
np.save('target', new_target)
```

In this step, we load the data from the files created in the previous step and then build the convolutional neural network from convolutional and max-pooling layers.

Code snippet :

```python
# Load the data saved in the previous step
data = np.load('data.npy')
target = np.load('target.npy')

# Build the convolutional neural network layers
model = Sequential()

model.add(Conv2D(100, (3, 3), input_shape=data.shape[1:]))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

# Note: MaxPooling2D with pool_size=(1, 1) leaves the feature map unchanged
model.add(Conv2D(100, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(1, 1)))

model.add(Conv2D(100, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(1, 1)))

model.add(Conv2D(100, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(1, 1)))

model.add(Conv2D(100, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(400, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(1, 1)))

# Classifier: flatten the feature maps, apply dropout, then two dense layers
model.add(Flatten())
model.add(Dropout(0.5))
model.add(Dense(50, activation='relu'))
model.add(Dense(2, activation='softmax'))

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])
```

Finally, the output of the last convolutional block is flattened, passed through a dropout layer (rate 0.5), and fed into a fully connected dense layer with 50 neurons, followed by a final dense layer with 2 neurons and a softmax activation. The softmax outputs the probability of each class, i.e. whether the person is wearing a mask or not.
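To see why Flatten() receives a 1x1x400 feature map (400 values) from a 32x32 grayscale input, the shapes can be traced layer by layer. This is a minimal plain-Python sketch, assuming the Keras defaults: Conv2D with stride 1 and padding='valid' (each 3x3 convolution trims one pixel from every border) and MaxPooling2D with strides equal to pool_size. `trace_shapes` and the `LAYERS` list are hypothetical, written only to mirror the model above; in practice `model.summary()` prints the same output shapes.

```python
# (layer kind, value): number of filters for "conv", pool size for "pool";
# mirrors the order of the Conv2D / MaxPooling2D layers in the model
LAYERS = [
    ("conv", 100), ("pool", 2),
    ("conv", 100), ("pool", 1),
    ("conv", 100), ("pool", 1),
    ("conv", 100), ("pool", 1),
    ("conv", 100), ("pool", 2),
    ("conv", 400), ("pool", 1),
]


def trace_shapes(img_size=32, kernel=3):
    """Return the (height, width, channels) output shape after each layer in LAYERS."""
    size, channels = img_size, 1
    shapes = []
    for kind, value in LAYERS:
        if kind == "conv":
            # 'valid' padding, stride 1: output = input - kernel + 1
            size, channels = size - kernel + 1, value
        else:
            # 'valid' pooling with stride = pool size: output = floor(input / pool)
            size = size // value
        shapes.append((size, size, channels))
    return shapes


shapes = trace_shapes()
print(shapes[-1])  # (1, 1, 400): Flatten() turns this into 400 values
```

The trace also shows that the three (1, 1) pooling layers do not change the shape; only the two (2, 2) pools and the convolutions shrink the feature maps.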

Before fitting the model, split the data and target into training and test sets: 10% of the data is kept for testing and the remaining 90% is used for training.

Code snippet :

```python
# Split data: 10% for testing, 90% for training
train_data, test_data, train_target, test_target = train_test_split(data, target, test_size=0.1)
```
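With 1376 images, this split and the validation_split=0.2 used later in model.fit() give the set sizes below. This is a plain-Python sketch of the arithmetic only, assuming that scikit-learn rounds a fractional test_size up for the test set (as its current implementation does) and that Keras keeps the first (1 - validation_split) fraction of the training arrays for training and uses the last samples for validation. `split_sizes` is a hypothetical helper.

```python
import math


def split_sizes(n_samples, test_size=0.1, validation_split=0.2):
    """Return (n_train, n_val, n_test) for train_test_split followed by validation_split."""
    # train_test_split: fractional test_size is rounded up for the test set
    n_test = math.ceil(test_size * n_samples)
    n_train_full = n_samples - n_test
    # Keras validation_split: first part trains, last part validates
    n_train = int(n_train_full * (1 - validation_split))
    n_val = n_train_full - n_train
    return n_train, n_val, n_test


print(split_sizes(1376))  # (990, 248, 138)
```

So roughly 72% of the images are used for training, 18% for validation, and 10% for testing.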

Create a checkpoint that saves the model whenever the validation loss reaches a new minimum, so the last file saved holds the model with the lowest validation loss. The model is then fitted on the training data so that it can make predictions later.
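The rule that ModelCheckpoint applies with save_best_only=True can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Keras code: `checkpoint_epochs` is a hypothetical helper, the validation losses are made up, and it assumes that mode='auto' minimizes a metric named val_loss and that Keras numbers epochs from 1 when filling `{epoch:03d}` in the file name.

```python
def checkpoint_epochs(val_losses):
    """Return the 1-based epochs at which a save_best_only checkpoint writes a file.

    Hypothetical helper mimicking ModelCheckpoint(monitor='val_loss',
    save_best_only=True, mode='auto'): a file is written only when the
    validation loss is lower than the best value seen so far.
    """
    best = float('inf')
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            saved.append(epoch)
    return saved


# Made-up validation losses for five epochs (not results from this article)
val_losses = [0.52, 0.41, 0.45, 0.33, 0.36]
saved = checkpoint_epochs(val_losses)
print(saved)  # [1, 2, 4]

# The last file written holds the lowest validation loss
print('model-{epoch:03d}.model'.format(epoch=saved[-1]))  # model-004.model
```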

Code snippet :

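```python
# Create a checkpoint and fit the model
# save_best_only=True writes a file only when val_loss improves; {epoch:03d}
# puts the epoch number in the file name (e.g. model-018.model).
# The '.model' name works with older TF 2.x Keras; newer Keras versions
# expect a file name ending in '.keras'.
checkpoint = ModelCheckpoint('model-{epoch:03d}.model', monitor='val_loss', verbose=0,
                             save_best_only=True, mode='auto')

# validation_split=0.2 holds out the last 20% of the training data for validation
history = model.fit(train_data, train_target, epochs=20, callbacks=[checkpoint],
                    validation_split=0.2)
```

After fitting the model, plot the accuracy and loss curves for the training and validation data.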
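The plotting code itself is not shown here, so this is one possible sketch using matplotlib, which the article already imports. It assumes that History.history uses the keys 'acc', 'val_acc', 'loss', and 'val_loss' because the model was compiled with metrics=['acc']; `plot_history` is a hypothetical helper name.

```python
import matplotlib.pyplot as plt


def plot_history(history_dict):
    """Plot training and validation accuracy and loss side by side.

    history_dict is the History.history dictionary returned by model.fit();
    the 'acc'/'val_acc' keys assume the model was compiled with metrics=['acc'].
    """
    epochs = range(1, len(history_dict['loss']) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))

    ax_acc.plot(epochs, history_dict['acc'], label='training accuracy')
    ax_acc.plot(epochs, history_dict['val_acc'], label='validation accuracy')
    ax_acc.set_xlabel('epoch')
    ax_acc.set_ylabel('accuracy')
    ax_acc.legend()

    ax_loss.plot(epochs, history_dict['loss'], label='training loss')
    ax_loss.plot(epochs, history_dict['val_loss'], label='validation loss')
    ax_loss.set_xlabel('epoch')
    ax_loss.set_ylabel('loss')
    ax_loss.legend()

    fig.tight_layout()
    return fig
```

After training, call `plot_history(history.history)` followed by `plt.show()`. If your Keras version names the keys differently, `print(history.history.keys())` shows the actual names.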

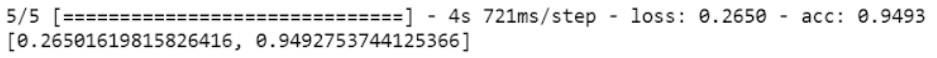

Next, evaluate the model on the test data. The model reaches about 94% accuracy on the test set, with a loss of 0.26.
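In Keras the evaluation is typically a single call, `model.evaluate(test_data, test_target)`, which returns the loss followed by the compiled metric (here accuracy). To show what those two numbers mean, here is a NumPy sketch that computes categorical cross-entropy and accuracy from softmax outputs and one-hot labels. The helper names and the four example predictions are made up for illustration, and Keras's own loss implementation also rescales and clips predictions internally, so results can differ slightly for extreme probabilities.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred)); clipping avoids log(0)."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


def accuracy(y_true, y_pred):
    """Fraction of samples whose highest-probability class matches the label."""
    return float(np.mean(np.argmax(y_pred, axis=1) == np.argmax(y_true, axis=1)))


# One-hot labels and hypothetical softmax outputs for four test images
y_true = np.array([[1, 0], [0, 1], [0, 1], [1, 0]])
y_pred = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.7, 0.3]])

loss = categorical_crossentropy(y_true, y_pred)
acc = accuracy(y_true, y_pred)
print(round(loss, 4))  # 0.4004
print(acc)  # 0.75 (the third image is misclassified)
```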


To use the model in real time through a webcam, first load the best model saved by the checkpoint callback. Then use a Haar cascade classifier to find the faces in each frame of the video, and create a label dictionary and a color dictionary for the two classes, i.e. Mask and No Mask.

Code snippet :

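```python
import cv2
import numpy as np
from keras.models import load_model

img_size = 32

# Load the best model: use the file name of the last checkpoint saved during training
model = load_model('model-018.model')

# The opencv-python packages ship the Haar cascade XML files in cv2.data.haarcascades
faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# Start the webcam (device 0)
video_capture = cv2.VideoCapture(0)

# These labels must match the label_dict printed while loading the dataset
labels_dict = {0: 'NO MASK', 1: 'MASK'}
# Colors are in BGR order: red for no mask, green for mask
color_dict = {0: (0, 0, 255), 1: (0, 255, 0)}
```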

In an infinite loop, capture each frame from the video stream, convert it to grayscale (the model was trained on grayscale images), and apply the cascade to find the regions of interest (ROIs), in our case faces. Resize and normalize each ROI and pass it to the model for prediction. The model returns the probabilities of mask and no mask, and the class with the higher probability is selected. A rectangle is drawn around the face together with a label indicating whether the person is wearing a mask. To close the webcam, press the Esc key.
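Two steps in this loop are easy to get wrong, so here is a minimal NumPy sketch of them: cropping a detected face and turning the model's softmax output into a label. detectMultiScale returns each face as (x, y, w, h), while NumPy indexes an image as [row, column], i.e. [y, x], so the crop must be gray[y:y+h, x:x+w]. `crop_face`, `pick_label`, the 640x480 frame, and the example box are illustrative assumptions; labels_dict is copied from the code above.

```python
import numpy as np

labels_dict = {0: 'NO MASK', 1: 'MASK'}


def crop_face(gray, box):
    """Crop one detected face; rows span the height h, columns the width w."""
    x, y, w, h = box
    return gray[y:y + h, x:x + w]


def pick_label(probabilities):
    """Map a (1, 2) softmax output to (class index, label text)."""
    index = int(np.argmax(probabilities, axis=1)[0])
    return index, labels_dict[index]


# A 640x480 grayscale frame has 480 rows and 640 columns
gray = np.zeros((480, 640), dtype=np.uint8)
face = crop_face(gray, (100, 50, 80, 120))
print(face.shape)  # (120, 80): 120 rows (height) by 80 columns (width)

print(pick_label(np.array([[0.3, 0.7]])))  # (1, 'MASK')
```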

Code snippet :

```python
while True:
    ret, frame = video_capture.read()
    if not ret:
        # Stop if no frame could be read from the camera
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # scaleFactor=1.3, minNeighbors=5
    faces = faceCascade.detectMultiScale(gray, 1.3, 5)

    for x, y, w, h in faces:
        # Rows are indexed by y (height h), columns by x (width w)
        face_img = gray[y:y + h, x:x + w]
        resized = cv2.resize(face_img, (img_size, img_size))
        normalized = resized / 255.0
        reshaped = np.reshape(normalized, (1, img_size, img_size, 1))
        result = model.predict(reshaped, verbose=0)

        # Pick the class with the higher probability
        label = np.argmax(result, axis=1)[0]

        cv2.rectangle(frame, (x, y), (x + w, y + h), color_dict[label], 2)
        cv2.putText(frame, labels_dict[label], (x, y - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 255, 255), 2)

    cv2.imshow('Video', frame)

    # Exit when Esc (key code 27) is pressed
    key = cv2.waitKey(1)
    if key == 27:
        break

video_capture.release()
cv2.destroyAllWindows()
```

The model is now ready to detect, in a real-time camera feed, whether a person is wearing a face mask, with the help of a convolutional neural network.
